Sportch, the award-winning iOS and Android app, is a social competition platform for sports. It recently enlisted Arc2’s fractional C-suite services.

As acting CTO, my mandate was to assess the codebase, architecture, and ecosystem for risk, scalability, and efficiency, and then to provide a plan to stabilise the platform and position it for sustained growth and faster speed to market.

This article outlines four major infrastructure changes I implemented — now live — and the measurable impact of those decisions.

A) Cloud exit

B) Adopting a modern webserver stack

C) Replacing the application service layer for raw performance

D) Customised DevOps workflow

A) Cloud exit

Cloud hosting offers scalability, which for enterprise translates as convenience, but at a premium. According to Flexera’s 2024 State of the Cloud Report, 82% of enterprises report cloud overspend — primarily due to underutilisation and service sprawl.

Sportch was built on an expensive and poorly implemented GCP Compute Engine deployment with multiple single points of failure. Managing the instance was also difficult because the GCP UI is highly complex, and the app was using perhaps 1% of the features GCP provides. Direct access to GCP instances is fraught with hurdles because of the complexity inherent in providing secure cloud-based CLI access, so control over the environment was hobbled.

Having evaluated the size of the user base, the load on the app, the costs involved and the complexity of the ecosystem, we determined that enterprise cloud hosting was not a good fit for this app. Sportch was getting the worst of both worlds, and it was clear that the original decision to use the cloud had been driven by trends rather than by any kind of analysis.

We migrated to an unmanaged VPS with a smaller hosting company that could provide better customer service (GCP customer service is unaffordable for bootstrapped startups), a solid redundancy offering and flat-rate billing. This restored clarity and predictability.

The improved CLI access and control of the self-managed VPS environment also gave us an opportunity to update various dependencies, including replacing SysVinit with systemd, which improved the stability of the WebSocket services.

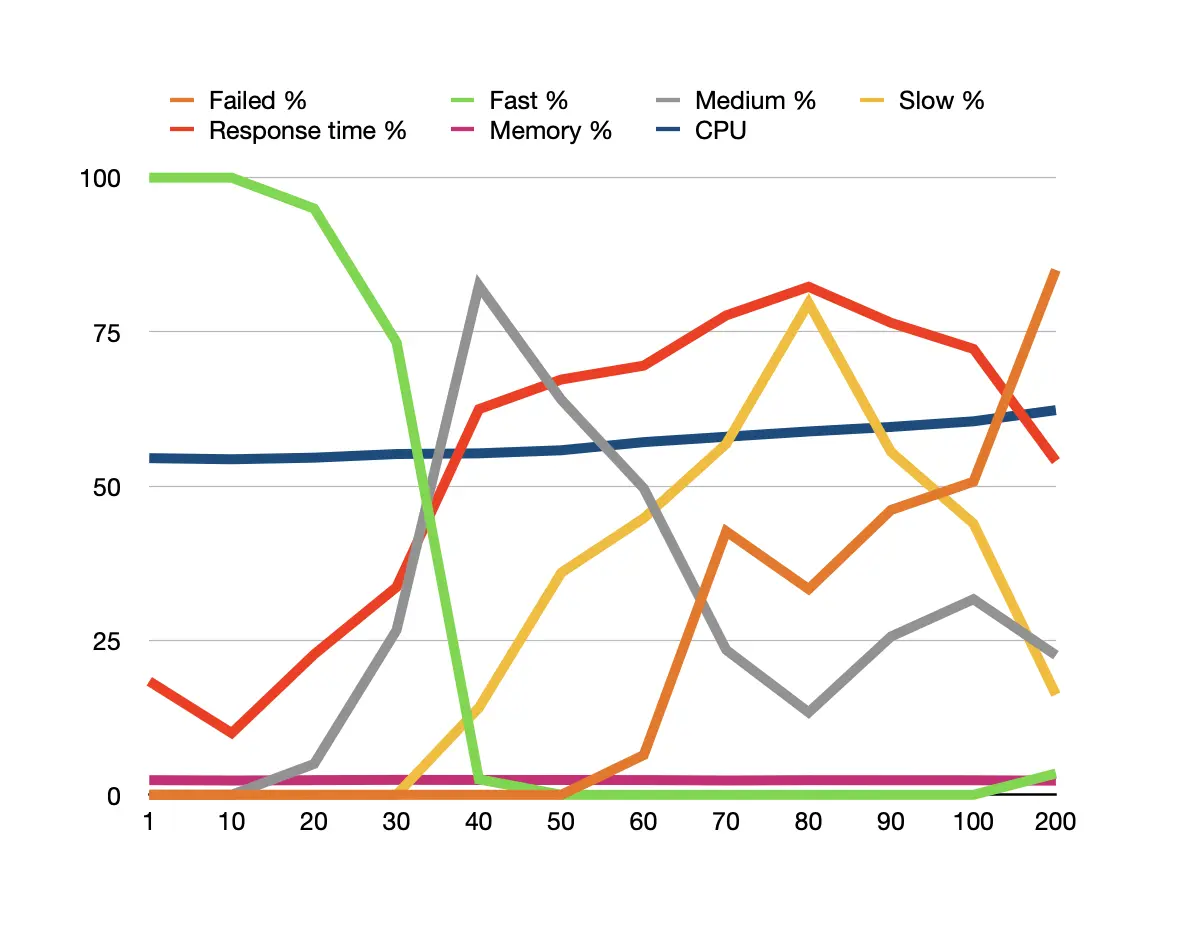

Now, you might be thinking, “But a VPS won’t scale.” And you’re not wrong. While it’s easy to upgrade a VPS with more memory and cores, that doesn’t always address scalability. For example, our benchmarks for the Sportch app on the new stack show that as we ramp up concurrent requests, the bottleneck is neither the CPU nor the memory: both are still well below capacity by the time requests start timing out.

The actual bottleneck is disk I/O from SQL queries, driven by the very complex and heavy queries required to serve the app.
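The effect is easy to reproduce in miniature. The sketch below is a hypothetical Python harness, not necessarily the tooling behind our benchmarks: it fires batches of simultaneous calls at increasing concurrency levels and reports the fraction that exceed a timeout. `simulated_query` is an assumed stand-in that queues every call on one shared lock, mimicking a saturated disk; in a real test, `call` would issue an HTTP request to the app instead.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def _timed(call: Callable[[], None]) -> float:
    """Run one call and return its wall-clock duration in seconds."""
    start = time.perf_counter()
    call()
    return time.perf_counter() - start


def ramp_concurrency(
    call: Callable[[], None], levels: list[int], timeout_s: float
) -> dict[int, float]:
    """For each concurrency level, fire that many simultaneous calls and
    return the fraction of calls that took longer than timeout_s."""
    slow_fraction: dict[int, float] = {}
    for level in levels:
        with ThreadPoolExecutor(max_workers=level) as pool:
            durations = list(pool.map(lambda _: _timed(call), range(level)))
        slow = sum(1 for d in durations if d > timeout_s)
        slow_fraction[level] = slow / level
    return slow_fraction


# Hypothetical workload: every call waits on one shared lock, standing in for
# a single saturated disk. Threads mostly sleep, so CPU stays low while
# latency climbs with concurrency.
_disk = threading.Lock()


def simulated_query() -> None:
    with _disk:
        time.sleep(0.01)


if __name__ == "__main__":
    print(ramp_concurrency(simulated_query, [1, 5, 20], timeout_s=0.05))
```

Because the shared resource serialises the work, adding cores or memory would not change these numbers; only making each "query" cheaper would.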

Concurrent request performance

There’s another blog article explaining how we ran these benchmarks.

So the key to scalability for this app lies more in optimising the database queries. Beyond that, we would likely scale horizontally, using a system like Vitess to shard the database and a load-balanced pod cluster on Kubernetes for the PHP app (the Docker containers already exist in the dev environment via FLMP, as described later in this article). At that point we would be moving back into the cloud, but there’s no need to do that until there’s a need to do that.
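To make the sharding idea concrete, here is a minimal Python sketch of hash-based key-range routing, the general scheme Vitess uses: each row’s sharding key maps to a 64-bit keyspace ID, and each shard owns a contiguous range of those IDs, named in Vitess style (`-40`, `40-80`, `80-c0`, `c0-`). The SHA-256-based hash and the four-shard layout are assumptions for illustration; Vitess’s own `hash` vindex uses a different function, and in practice Vitess performs this routing for you.

```python
import hashlib
from bisect import bisect_right

# Hypothetical four-shard layout using Vitess-style key-range names:
# "-40" covers keyspace IDs [0x00..., 0x40...), "c0-" covers [0xc0..., end].
SHARDS = ["-40", "40-80", "80-c0", "c0-"]
# Inclusive lower bound of every shard after the first, as 64-bit integers.
BOUNDARIES = [0x40 << 56, 0x80 << 56, 0xC0 << 56]


def keyspace_id(user_id: int) -> int:
    """Stand-in hash: the first 8 bytes of SHA-256 as an unsigned 64-bit int.
    (Not the function Vitess's `hash` vindex uses.)"""
    digest = hashlib.sha256(str(user_id).encode()).digest()
    return int.from_bytes(digest[:8], "big")


def shard_for(user_id: int) -> str:
    """Return the shard whose key range contains this user's keyspace ID."""
    return SHARDS[bisect_right(BOUNDARIES, keyspace_id(user_id))]


if __name__ == "__main__":
    for uid in (1, 2, 3, 42):
        print(uid, shard_for(uid))
```

Hashing spreads users evenly across shards, and splitting a hot shard later only means dividing its key range in two.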

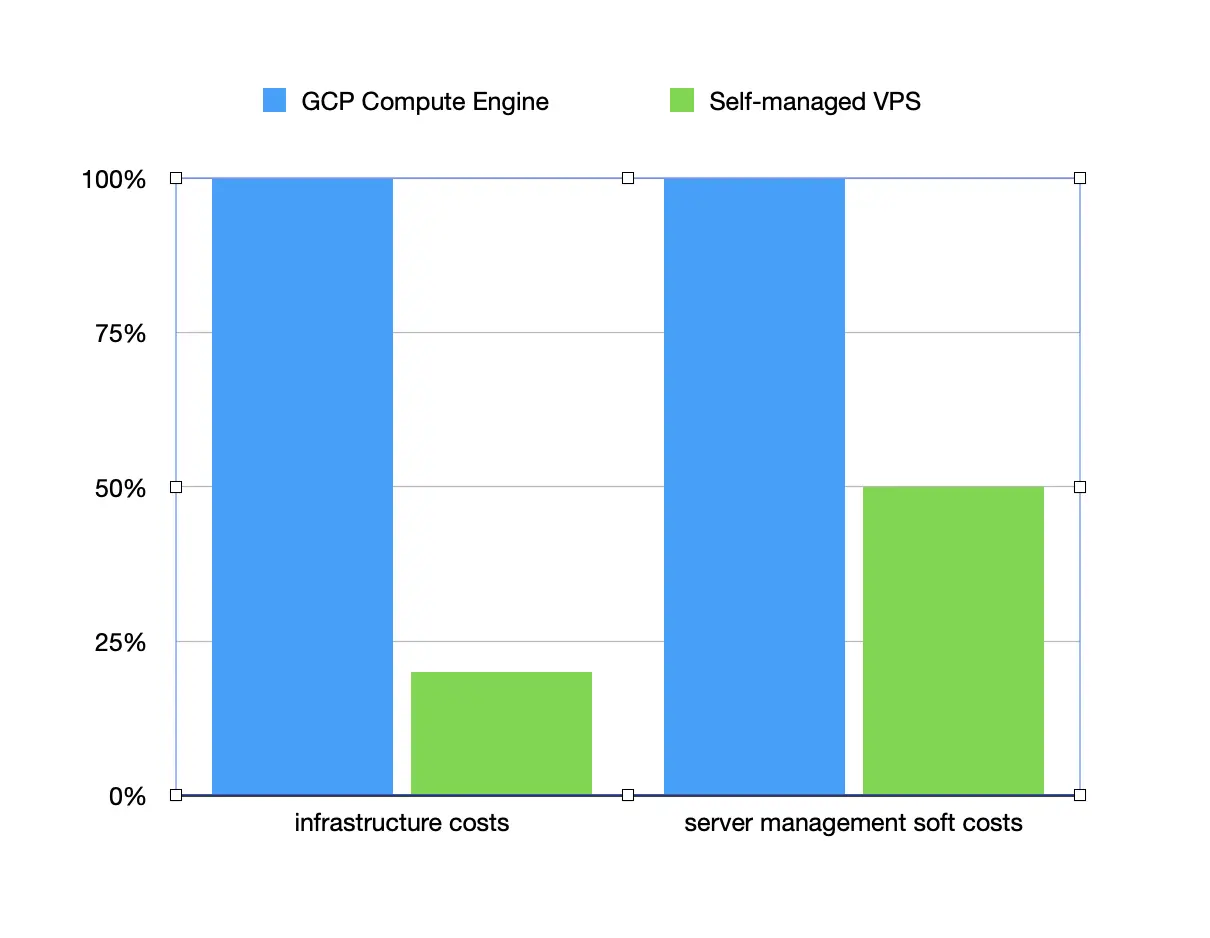

Results:

- ~80% lower infrastructure costs

- ~50% lower server management soft costs

Cloud vs VPS Performance Comparison

Why it worked:

The move aligned with post-cloud decentralisation trends and cut latency from provider-side abstractions. It also enabled better CLI control, system-level monitoring, improved redundancy and full-stack reproducibility.

B) A Modern Webserver

Another problem facing Sportch was that the app was slow and unreliable, with some key services requiring weekly manual restarts.

Apache’s long legacy made it the default choice, but not an ideal one. In benchmarks by Stack Overflow, Apache performs significantly worse under concurrent load than newer webservers.

We replaced it with Caddy, which offers:

- Auto HTTPS (via Let’s Encrypt)

- HTTP/3 support

- Minimal config

- Inbuilt reverse proxy + load balancing

- Blazing fast speeds

It’s fast, secure, and extremely low-maintenance – perfect for modern CI/CD workflows.
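Caddy’s `reverse_proxy` directive handles load balancing itself and supports several `lb_policy` options, including `round_robin`. As a rough illustration of what that policy does, here is a minimal Python sketch of round-robin selection that skips upstreams marked unhealthy. The class and the upstream addresses (for example `10.0.0.1:9000`) are hypothetical; this is not Caddy’s implementation.

```python
import threading


class RoundRobinBalancer:
    """Round-robin upstream selection that skips upstreams marked unhealthy."""

    def __init__(self, upstreams: list[str]) -> None:
        if not upstreams:
            raise ValueError("need at least one upstream")
        self.upstreams = list(upstreams)
        self.healthy = set(self.upstreams)
        self._next = 0
        self._lock = threading.Lock()  # picks may come from many request threads

    def mark_down(self, upstream: str) -> None:
        with self._lock:
            self.healthy.discard(upstream)

    def mark_up(self, upstream: str) -> None:
        with self._lock:
            if upstream in self.upstreams:
                self.healthy.add(upstream)

    def pick(self) -> str:
        with self._lock:
            # Try each upstream at most once, starting after the previous pick.
            for _ in range(len(self.upstreams)):
                candidate = self.upstreams[self._next]
                self._next = (self._next + 1) % len(self.upstreams)
                if candidate in self.healthy:
                    return candidate
        raise RuntimeError("no healthy upstreams")


if __name__ == "__main__":
    lb = RoundRobinBalancer(["10.0.0.1:9000", "10.0.0.2:9000", "10.0.0.3:9000"])
    lb.mark_down("10.0.0.2:9000")  # e.g. after a failed health check
    print([lb.pick() for _ in range(4)])
```

In Caddy, the equivalent behaviour is configuration rather than code, which is a large part of why it is so low-maintenance.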

We packaged it as a reusable Docker stack, CLMP, published on GitHub. Arc2 developed CLMP independently as an open-source library, which we offer to the PHP development community, and we use it in our own PHP dev workflow as a replacement for the ubiquitous WAMP / XAMPP / MAMP toolset.

This provided consistency across developer environments and platforms. Having better control over the application hosting environment also enabled us to align the dev and production environments precisely.

C) A More Modern Application Service Layer

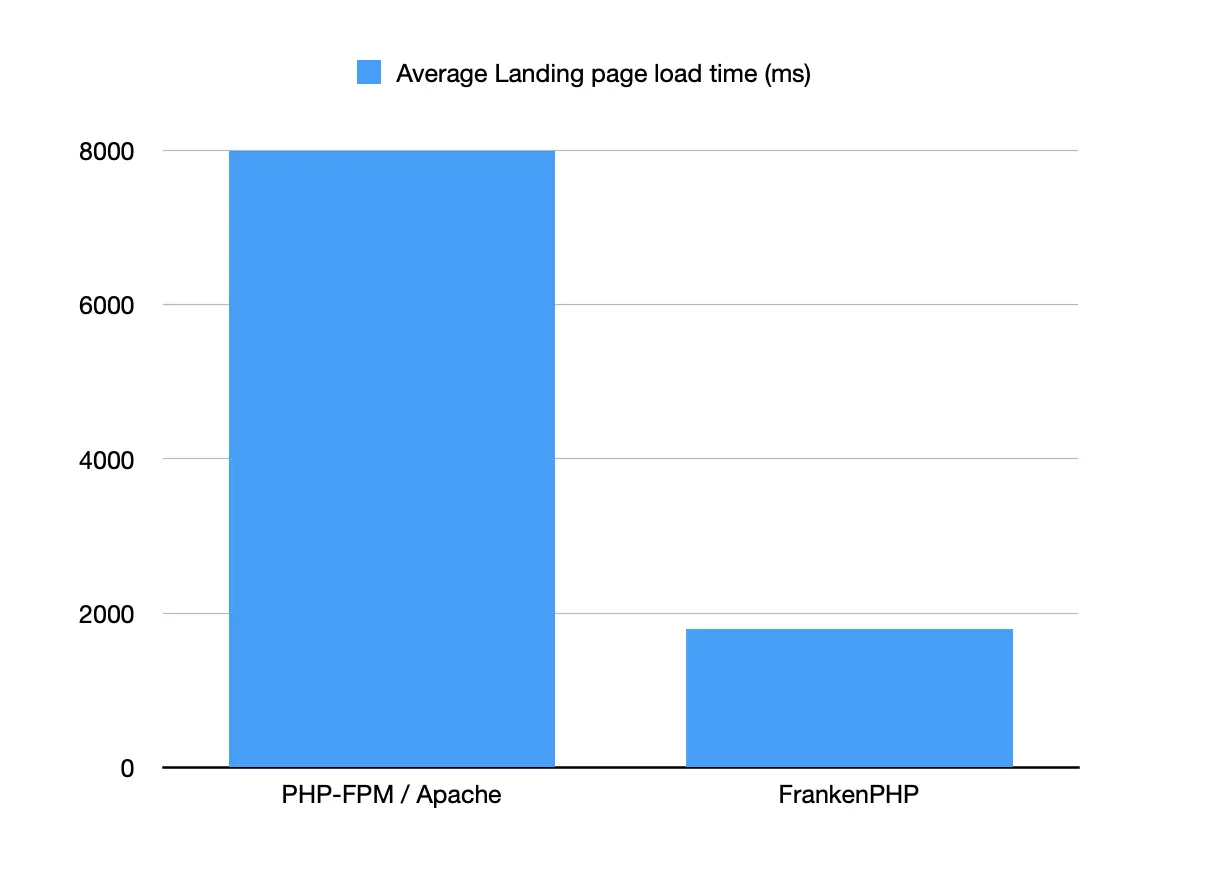

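PHP-FPM is still common, but slow. It hands each request to a pooled worker process that re-runs the application bootstrap from scratch and discards that state afterwards, introducing latency and memory overhead, especially for high-frequency APIs.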

FrankenPHP changes that. It embeds the PHP runtime into a Go binary, enabling persistent workers and shared memory.
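A toy Python model shows where the saving comes from. In PHP’s classic shared-nothing model, every request re-runs the application bootstrap; in FrankenPHP’s worker mode, the app boots once and stays in memory between requests. `App`, `BOOT_COST_S`, and its 20 ms value are hypothetical stand-ins for loading configuration, autoloaders, and a service container.

```python
import time

BOOT_COST_S = 0.02  # hypothetical cost of bootstrapping the framework


class App:
    boots = 0  # counts how many times the app has been bootstrapped

    def __init__(self) -> None:
        App.boots += 1
        time.sleep(BOOT_COST_S)  # stand-in for config, autoloading, DI container

    def handle(self, request: int) -> str:
        return f"response to request {request}"


def classic_model(requests: list[int]) -> list[str]:
    """Shared-nothing: bootstrap a fresh app for every request."""
    return [App().handle(r) for r in requests]


def worker_model(requests: list[int]) -> list[str]:
    """Worker mode: bootstrap once, then reuse the same app for each request."""
    app = App()
    return [app.handle(r) for r in requests]


if __name__ == "__main__":
    for model in (classic_model, worker_model):
        App.boots = 0
        start = time.perf_counter()
        model(list(range(10)))
        elapsed = time.perf_counter() - start
        print(f"{model.__name__}: {App.boots} boots, {elapsed:.2f}s")
```

The trade-off is that state now survives between requests, so anything request-scoped (globals, static caches, open handles) has to be reset after each request.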

In FrankenPHP benchmarks, FrankenPHP handles:

- Up to 4x more requests/sec

- ~3x lower latency than FPM

- Better memory efficiency under load

We rewired the stack to integrate FrankenPHP, which is built as a Caddy webserver module and comes with that lightning-fast webserver built in. There are some pitfalls to this, notably that FrankenPHP does not offer a CLI environment. However, this can be mitigated by installing PHP separately on the host machine, and ensuring a version match with the FrankenPHP build.
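A small check can catch version drift between the two. The Python sketch below extracts the PHP version from `php -v` (whose output begins `PHP X.Y.Z (cli)`) and from the FrankenPHP binary’s version output, then compares them. It assumes `frankenphp version` prints a string containing `PHP X.Y.Z`; verify that against your build before relying on it. The helper names are hypothetical.

```python
import re
import subprocess

# Matches e.g. "PHP 8.3.4"; the word boundary stops "FrankenPHP" from matching.
_PHP_VERSION = re.compile(r"\bPHP (\d+)\.(\d+)\.(\d+)")


def php_version(text: str) -> tuple[int, ...]:
    """Extract (major, minor, patch) from a version banner."""
    match = _PHP_VERSION.search(text)
    if match is None:
        raise ValueError(f"no PHP version found in {text!r}")
    return tuple(int(part) for part in match.groups())


def versions_match(cli_output: str, frankenphp_output: str, parts: int = 3) -> bool:
    """Compare the first `parts` components (3 = exact, 2 = major.minor)."""
    return php_version(cli_output)[:parts] == php_version(frankenphp_output)[:parts]


def check_host() -> bool:
    """Run both binaries on this host and compare their PHP versions."""
    cli = subprocess.run(["php", "-v"], capture_output=True, text=True, check=True)
    # Assumption: `frankenphp version` output includes "PHP X.Y.Z".
    fp = subprocess.run(
        ["frankenphp", "version"], capture_output=True, text=True, check=True
    )
    return versions_match(cli.stdout, fp.stdout)


if __name__ == "__main__":
    print("versions match" if check_host() else "VERSION MISMATCH")
```

Running a check like this in the provisioning or deployment step turns a silent mismatch into an explicit failure.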

Building FrankenPHP for a particular environment and setup is complex, and it took a good deal of R&D to make it a good fit for general PHP development and deployment workflows. I’ve encapsulated this knowledge in another open-source repository, offered freely to the PHP dev community: https://github.com/arc2digital/FLMP. It is similar to CLMP but uses FrankenPHP instead of Caddy / PHP-FPM. It includes a custom Dockerfile that distils the R&D learnings into a single file, which you should be able to edit for your environment. AlmaLinux was selected as the distro because of its strong focus on stability and security, which makes it an excellent fit for most web app use cases.

I’ll be writing up an FLMP tutorial in a future blog post.

Results:

- ~400% faster load times

- ~25% lower server load

Request Throughput Under Load

Application response times and stability improved, and mobile user experience became noticeably faster.

D) Customised DevOps Workflow

Consultation with the legacy dev team revealed a major bottleneck: full deployments were taking up to a week. The process was manual, poorly documented, multi-stage, and spanned several platforms — rife with opportunities for error.

We began by establishing a wiki-based knowledge base to preserve institutional knowledge. This was urgent, as the only person with a full understanding of the deployment process was suffering from a brain tumour and could only communicate in short, low-stress sessions.

To handle this respectfully and effectively, we implemented the following strategy:

- Encouraged the individual to contribute process notes to the wiki at their own pace

- Conducted structured interviews to extract and record key knowledge

- For undocumented internal code logic, we built an AI-assisted agent grounded in the codebase using embeddings and context engineering. This allowed us to query architectural logic and generate artifacts such as sequence diagrams on demand
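The retrieval step behind an agent like this can be sketched in a few lines. This is a toy Python version, not the agent we built: a bag-of-words vector stands in for a real embedding model, chunks are ranked by cosine similarity to the question, and the top-ranked chunks are assembled into the context that would be sent to a language model. The file names and chunk text in `EXAMPLE_CHUNKS` are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical code chunks, keyed by file path.
EXAMPLE_CHUNKS = {
    "src/Match/ScoreService.php": "class ScoreService calculates match scores and updates leaderboard rankings",
    "src/Notify/PushSender.php": "class PushSender sends push notifications to iOS and Android devices",
    "deploy/release.sh": "release script pulls the latest tag and restarts the websocket service",
}


def embed(text: str) -> Counter:
    """Stand-in for a real embedding model: a term-frequency vector."""
    return Counter(re.findall(r"[a-z_]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: dict[str, str], k: int = 2) -> list[str]:
    """Return the names of the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda name: cosine(q, embed(chunks[name])), reverse=True)
    return ranked[:k]


def build_context(query: str, chunks: dict[str, str], k: int = 2) -> str:
    """Assemble the retrieved chunks plus the question into one prompt."""
    parts = [f"### {name}\n{chunks[name]}" for name in retrieve(query, chunks, k)]
    return "\n\n".join(parts) + f"\n\nQuestion: {query}"


if __name__ == "__main__":
    print(build_context("how are leaderboard scores calculated", EXAMPLE_CHUNKS))
```

Grounding answers in retrieved source code, rather than in the model’s general knowledge, is what makes generated artifacts like sequence diagrams reflect the actual codebase.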

We also introduced GitHub (free tier), which brought:

- Cloud-based redundancy to protect IP

- Integrated version control for better collaboration

- CI/CD pipeline support via GitHub Actions

While GitHub Actions is powerful, its default pipelines can be slow. To avoid productivity loss, we implemented our own custom CI/CD workflow that uses Git intelligently to reduce build times without sacrificing security or reliability.
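The details of that workflow aren’t covered here, so the following is only a sketch of one common way Git can cut build times: ask Git which files changed between the currently deployed commit and the new one, and run only the steps those changes require. The path patterns in `STEP_RULES` and the step names are hypothetical.

```python
import subprocess
from fnmatch import fnmatchcase

# Hypothetical mapping from changed-path patterns to deployment steps,
# listed in the order the steps should run.
STEP_RULES = [
    ("composer.lock", "composer_install"),
    ("database/migrations/*", "run_migrations"),
    ("resources/js/*", "build_assets"),
    ("*.php", "reload_workers"),
]


def changed_files(old: str, new: str, repo: str = ".") -> list[str]:
    """List paths that differ between two commits, using `git diff --name-only`."""
    out = subprocess.run(
        ["git", "-C", repo, "diff", "--name-only", old, new],
        capture_output=True, text=True, check=True,
    ).stdout
    return [line for line in out.splitlines() if line]


def steps_for(paths: list[str]) -> list[str]:
    """Return the deployment steps triggered by the changed paths, in rule order."""
    steps: list[str] = []
    for pattern, step in STEP_RULES:
        if step not in steps and any(fnmatchcase(p, pattern) for p in paths):
            steps.append(step)
    return steps


if __name__ == "__main__":
    print(steps_for(changed_files("HEAD~1", "HEAD")))
```

A change that only touches PHP files then skips dependency installs, migrations and asset builds entirely, which is where most of the time goes in a naive pipeline.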

Results:

- Full deployment time reduced from ~7 days to ~10–15 seconds

- Stable, repeatable builds

- Zero external dependencies

We offer this workflow as a standard to all Arc2 clients.

Lastly, to support scalability within a VPS environment, we developed a shell script for provisioning new environments in under 15 minutes. This eliminates vendor lock-in and enables rapid up/down scaling in response to changing needs.

This complete DevOps overhaul restored velocity, reduced fragility, and established modern development hygiene from the ground up.

Results:

- Deployment time reduced by ~75%

Conclusion

We didn’t chase trends. Instead, we simplified, stabilised, and focused on what the business needed: speed, reliability, and cost reduction. In summary, we:

- Cut cloud bills by over half

- Improved API speed by 4x

- Enabled fast, reliable automated deployment

- Improved security and resilience